Cognitive flexibility, the ability to switch rapidly between thoughts and mental concepts, is a crucial human trait: it supports multitasking, quick skill acquisition, and adaptation to new situations. Although artificial intelligence (AI) systems have advanced considerably, they still fall short of humans in how flexibly they learn new skills and switch between tasks.

Recent research by a team of computer scientists and neuroscientists from New York University, Columbia University, and Stanford University trained a single recurrent neural network to perform 20 related tasks. The goal was to understand how a network carries out the modular computations that let it handle many different tasks efficiently. The study revealed “dynamical motifs”: recurring patterns of neural activity that implement specific computations through their dynamics, such as attractors, decision boundaries, and rotations.

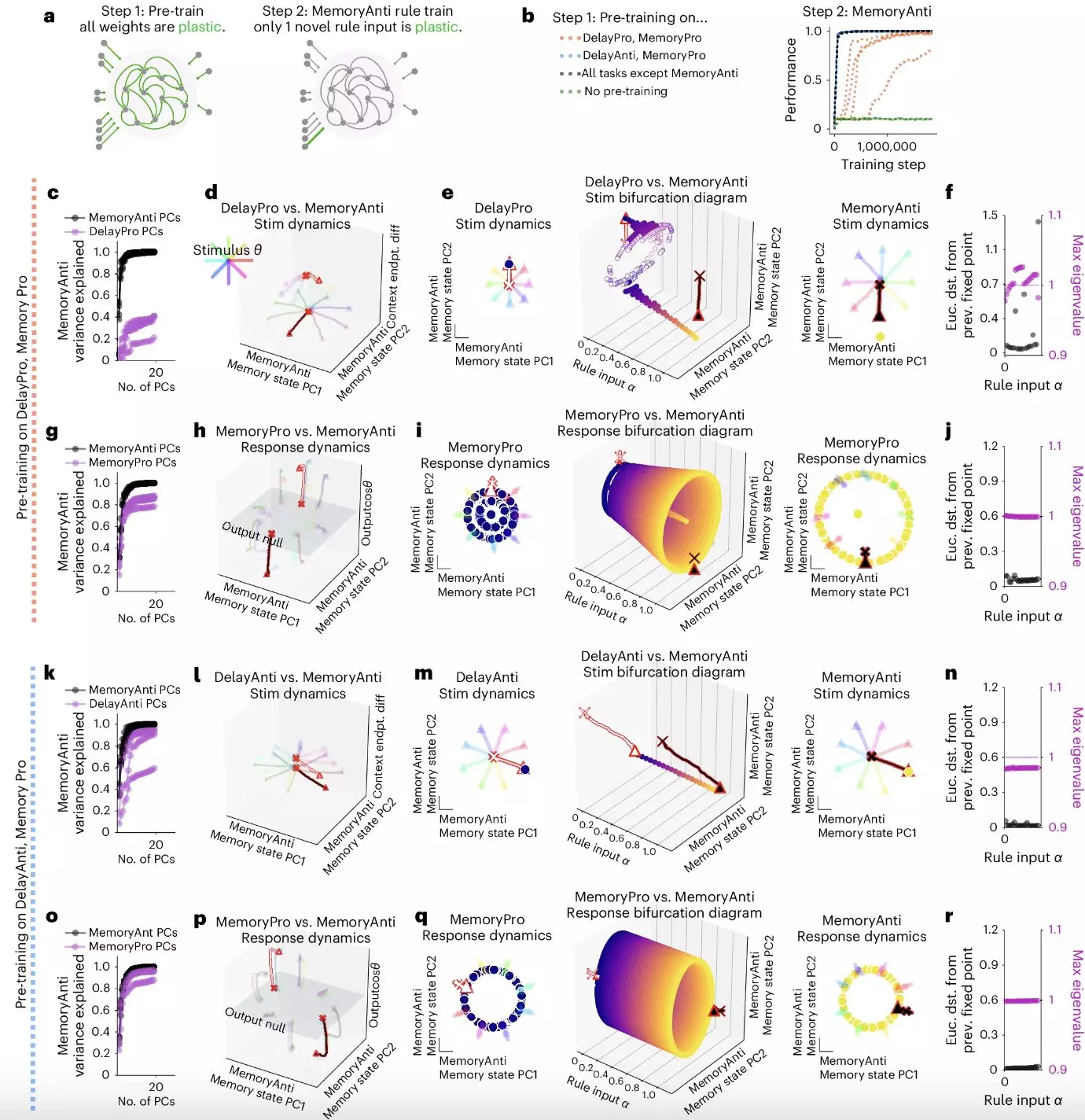

Driscoll, Shenoy, and Sussillo identified a computational substrate in recurrently connected artificial neural networks that enables modular computation. The study showed that dynamical motifs were reused across tasks: for example, tasks that required remembering a continuous circular variable, such as an angle, repurposed the same ring attractor. Lesioning the units that implement a motif also produced modular performance deficits, impairing the tasks that relied on that motif.
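To make the ring-attractor idea concrete, here is a minimal, hand-wired sketch in Python. It is a textbook toy model, not the trained networks from the study, and every name and parameter value in it is an illustrative assumption: 64 rate units with preferred angles spaced evenly around a circle, cosine-shaped connectivity (uniform inhibition `j0` plus excitation `j1` between units with similar angles), and a positive, saturating activation `tanh(max(x, 0))`. A brief cue pushes activity toward one angle; once the cue is removed, recurrent excitation keeps a "bump" of activity at that angle, so the network holds a circular variable in memory.

```python
import math


def activation(x):
    """Positive, saturating rate function: tanh of the rectified input."""
    return math.tanh(max(x, 0.0))


def simulate_ring(n=64, j0=-2.0, j1=8.0, cue=1.0,
                  cue_steps=100, delay_steps=300, dt=0.1):
    """Euler-integrate tau * dr/dt = -r + f(W r + I) with tau = 1.

    A cue tuned to angle `cue` (radians) is on for `cue_steps` steps and
    then switched off for `delay_steps` steps (the memory period).
    Returns each unit's preferred angle and the final firing rates.
    """
    thetas = [2 * math.pi * i / n for i in range(n)]
    # Hypothetical cosine connectivity: uniform inhibition (j0 < 0) plus
    # excitation between units with similar preferred angles (j1 > 0).
    w = [[(j0 + j1 * math.cos(ti - tj)) / n for tj in thetas] for ti in thetas]
    r = [0.0] * n
    for step in range(cue_steps + delay_steps):
        cue_on = step < cue_steps
        # External input peaks at the cued angle; zero during the delay.
        inputs = [math.cos(t - cue) if cue_on else 0.0 for t in thetas]
        drive = [sum(wij * rj for wij, rj in zip(row, r)) + inp
                 for row, inp in zip(w, inputs)]
        r = [ri + dt * (-ri + activation(d)) for ri, d in zip(r, drive)]
    return thetas, r


def decode_angle(thetas, rates):
    """Population-vector readout of where the activity bump sits."""
    x = sum(ri * math.cos(t) for ri, t in zip(rates, thetas))
    y = sum(ri * math.sin(t) for ri, t in zip(rates, thetas))
    return math.atan2(y, x)


if __name__ == "__main__":
    thetas, rates = simulate_ring()
    print("remembered angle:", round(decode_angle(thetas, rates), 3))
    _, no_recurrence = simulate_ring(j1=0.0)
    print("peak rate without recurrent excitation:", max(no_recurrence))
```

Under these assumptions the decoded angle should stay close to the cued 1.0 radian after the cue ends, whereas setting `j1=0.0` removes the recurrent excitation and the activity simply decays. Because every angle on the ring is an equally good resting place for the bump, the same circuit can store any cued direction, which is what makes a single ring attractor reusable across tasks that need circular memory.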

The researchers also found that when the units' activation function was restricted to positive values, motifs in these recurrent networks were implemented by distinct clusters of units. After an initial phase of learning, the motifs could be reconfigured for fast transfer learning, underscoring their role in compositional computation. The study establishes dynamical motifs as a fundamental unit of computation, at a level intermediate between individual neurons and the network as a whole.

The findings have implications for both neuroscience and computer science. Understanding the role of dynamical motifs in neural networks could clarify the processes that underlie cognitive flexibility. By emulating these processes in artificial neural networks, researchers may be able to build more flexible AI systems that switch rapidly between tasks and learn new skills quickly.

The study conducted by Driscoll, Shenoy, and Sussillo sheds light on the importance of dynamical motifs in enabling cognitive flexibility in artificial neural networks. By identifying these recurring patterns of neural activity, researchers have opened up new avenues for exploring the mechanisms that support multi-tasking and adaptation. This research paves the way for future studies that aim to enhance the flexibility of AI systems and deepen our understanding of the intricate processes that underlie cognitive flexibility in humans.