Self-driving cars have occasionally crashed because of limitations in their visual systems. In particular, difficulty perceiving static or slow-moving objects in 3D space has posed significant challenges. Researchers at the University of Virginia School of Engineering and Applied Science have taken inspiration from the praying mantis to develop artificial compound eyes that address these limitations.

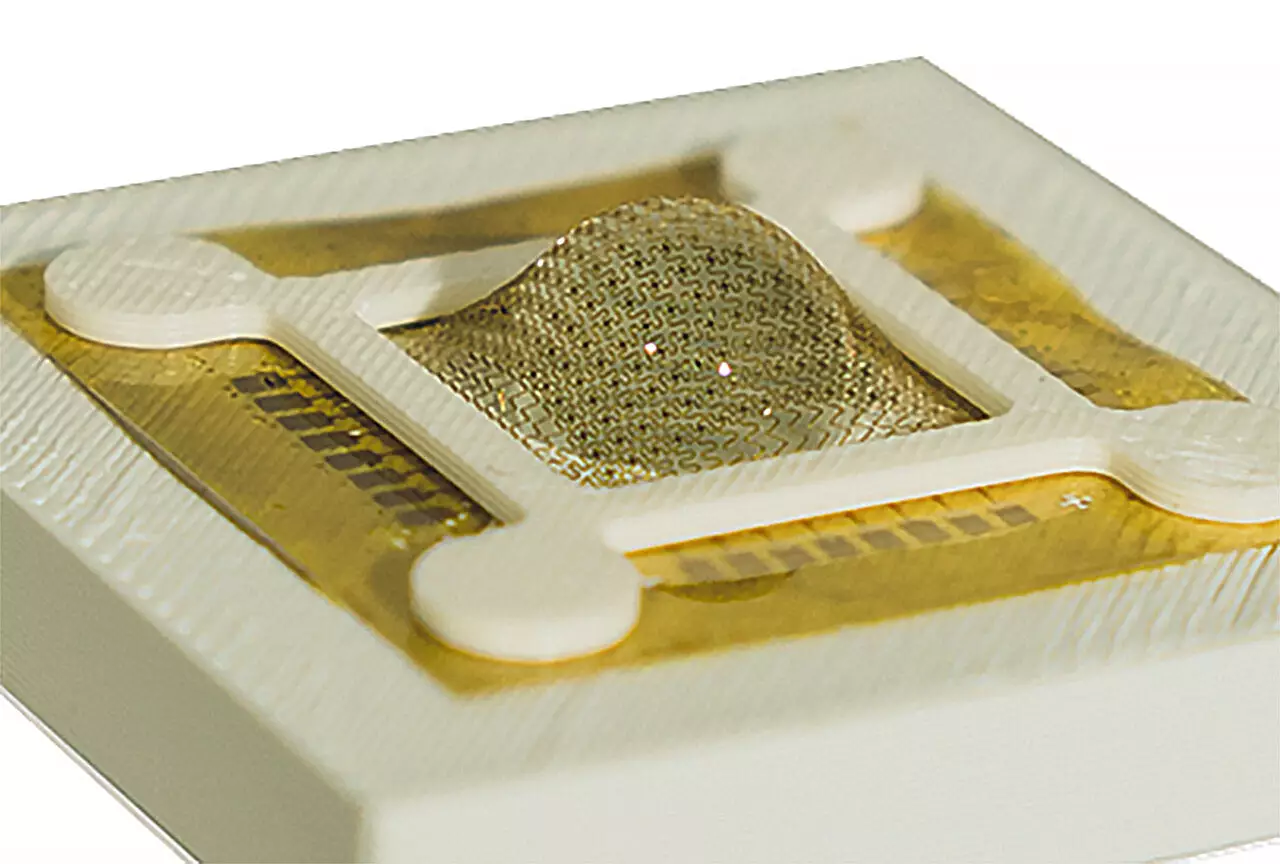

By studying the praying mantis’s unique visual system, the research team designed artificial compound eyes that replicate its biological capabilities. The eyes integrate microlenses with multiple photodiodes, which produce an electrical current when exposed to light. Flexible semiconductor materials mimic the convex shape and faceted arrangement of mantis eyes, yielding a system that delivers precise spatial awareness in real time.

The development of these artificial compound eyes has far-reaching implications across industries. For applications ranging from low-power vehicles and drones to self-driving cars, robotic assembly systems, surveillance and security systems, and smart home devices, processing visual information efficiently and accurately is crucial. The potential to cut power consumption by more than 400 times compared with traditional visual systems would be a major advance.

Unlike traditional visual systems that rely on cloud computing, the artificial compound eyes developed by the research team process visual information locally and in real time. This approach avoids the time and resource costs of transferring data for external computation, while also reducing energy use. The integration of flexible semiconductor materials, conformal devices, in-sensor memory components, and post-processing algorithms represents a technological breakthrough in visual data processing.

The key to this system's success lies in mimicking how insects perceive the world through visual cues. By continuously monitoring changes in the scene and encoding this information into smaller data sets for processing, the artificial compound eyes replicate the sophisticated visual processing of insects such as the praying mantis. Combining advanced materials with these algorithms enables real-time, efficient, and accurate 3D spatiotemporal perception.
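The idea of encoding only scene changes, rather than full images, resembles the general principle behind event-based vision. The sketch below is a generic illustration of that principle under this assumption, not the University of Virginia team's actual method: it compares two grayscale frames and emits a sparse list of events only for pixels whose brightness changed by more than a threshold. The function name `encode_changes`, the frame representation, and the default `threshold` are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

# Hypothetical representation: a frame is a 2D grid of brightness values in [0, 1].
Frame = List[List[float]]
# An event is (row, col, polarity): +1 means the pixel got brighter, -1 darker.
Event = Tuple[int, int, int]


def encode_changes(prev: Frame, curr: Frame, threshold: float = 0.1) -> List[Event]:
    """Return sparse events for pixels whose brightness changed by more than threshold.

    Unchanged pixels produce no output, so a mostly static scene yields a much
    smaller data set than the full frame.
    """
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same shape")
    events: List[Event] = []
    for r, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(row_prev, row_curr)):
            delta = q - p
            if abs(delta) > threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


if __name__ == "__main__":
    prev = [[0.0, 0.0, 0.0],
            [0.0, 0.5, 0.0],
            [0.0, 0.0, 0.0]]
    curr = [[0.0, 0.0, 0.0],
            [0.0, 0.9, 0.0],
            [0.0, 0.0, 0.2]]
    # Only 2 of the 9 pixels changed enough to be reported.
    print(encode_changes(prev, curr))
```

Running the example prints `[(1, 1, 1), (2, 2, 1)]`: of nine pixels, only the two that changed are passed on for further processing, which is the kind of data reduction the article describes.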

The University of Virginia team's work offers significant scientific insight into biomimetic solutions for complex visual processing challenges. By demonstrating how artificial compound eyes can enhance depth perception and spatial awareness, the research could inspire engineers and scientists to explore new approaches to visual data processing. The future of self-driving cars and other automated systems may be shaped by biomimicry and by the lessons learned from studying nature’s own solutions to visual processing.
