In the fast-evolving landscape of artificial intelligence, leveraging data effectively is crucial for enterprises that seek to harness the full potential of AI. Large language models (LLMs) have emerged as powerful tools in this regard, enabling businesses to generate insights and automate customer interactions. However, the integration of both structured and unstructured data into LLMs presents significant challenges that have hindered optimal deployment. This is the context in which Retrieval Augmented Generation (RAG) strategies have gained relevance, as they offer a pathway to utilize this data effectively.

Making structured data accessible for RAG applications involves critical complexities that extend beyond simple data retrieval. Swami Sivasubramanian, Vice President of AI and Data at AWS, highlighted that successfully leveraging structured data demands organizations to engage in complex operations like converting natural language queries into intricate SQL commands. These commands must be capable of filtering, merging tables, and aggregating data from diverse sources. The typical data landscape within enterprises is often comprised of data lakes and warehouses, where data is organized but not necessarily optimized for immediate use in RAG environments.

Sivasubramanian elaborated on the necessity for organizations to understand the schema of their data thoroughly. He emphasized that creating an effective and secure RAG system requires a deeper comprehension of historical query logs and continuous adjustments to schema changes. In this context, the recent innovations by AWS were positioned as a solution to facilitate structured data retrieval, enhancing the avenue for enterprises to employ their data in advanced AI applications.

Amazon Bedrock Knowledge Bases: A Game Changer

At AWS re:Invent 2024, AWS took a decisive step forward with the launch of Amazon Bedrock Knowledge Bases. This fully managed service is designed to streamline the RAG workflow, eliminating the need for enterprises to engage in manual coding for data source integration and query management. Sivasubramanian noted that this service would automatically generate SQL queries to retrieve essential enterprise data, subsequently enriching LLM responses with relevant context.

A key advantage of the Amazon Bedrock Knowledge Bases is its adaptability—it learns from user query patterns and can accommodate changes to data schemas. This capability not only enhances the accuracy of generated AI applications but also empowers enterprises to develop more intelligent, data-driven strategies.

Integrating Knowledge Graphs for Enhanced Insight

Another critical area for improvement in enterprise AI applications is the integration of diverse data sources to build accurate, explainable RAG systems. Here, AWS’s introduction of the GraphRAG feature stands out. This capability focuses on uncovering relationships between disparate data points, addressing one of the major pain points for enterprises. Knowledge graphs, as explained by Sivasubramanian, serve as a bridge that connects various datasets, allowing organizations to glean a comprehensive perspective of their operational data.

The GraphRAG functionality within Amazon Bedrock Knowledge Bases utilizes the Amazon Neptune graph database service to construct these knowledge graphs without requiring specialized expertise from users. By doing so, the feature enhances the efficacy of generative AI applications, delivering deeper insights into customer relationships and interactions, ultimately strengthening decision-making processes.

Unstructured data remains a significant barrier for enterprises striving to implement RAG solutions. The inherent complexity of unstructured formats—such as PDFs, audio, and video—makes them less accessible and often obfuscates valuable insights. Sivasubramanian pointed out that the extraction and transformation of unstructured data are critical for maximizing its utility in AI contexts.

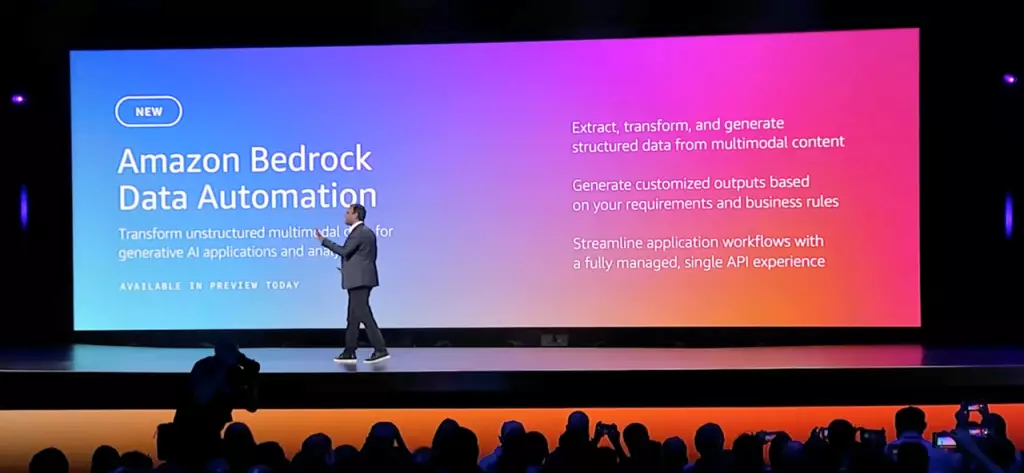

In response to these challenges, AWS has introduced Amazon Bedrock Data Automation, a groundbreaking feature that automates the transformation of unstructured multimodal content into structured formats suitable for AI applications. This innovative tool functions like an ETL (Extract, Transform, Load) process powered by generative AI, enabling enterprises to efficiently process and leverage their expansive data sets.

With a streamlined API that simplifies data integration, Amazon Bedrock Data Automation promises to significantly enhance the predictive capabilities of LLMs by ensuring that organizations can quickly transform raw content into actionable insights.

Empowering Enterprises through Data Integration

The advancements unveiled by AWS signal a turning point for enterprises looking to unlock the potential of their datasets through RAG frameworks. By addressing the complexities of structured data retrieval, harnessing the power of knowledge graphs, and automating the processing of unstructured data, AWS has established a foundation for more contextually relevant and insightful generative AI applications.

As enterprises increasingly recognize the necessity of efficient data integration, AWS’s offerings present viable solutions to overcome the multifaceted challenges posed by existing data landscapes. The result is a more empowered enterprise equipped to navigate the complexities of AI, transforming data into a strategic asset that drives innovation and growth.

Leave a Reply