Privacy has become a significant concern for users who have grown weary of corporate overreach into their personal lives. Signal, a messaging platform dedicated to secure communication, has consistently championed user privacy. Recently, it took a notable step to protect its users with a new feature, Screen Security, which aims to mitigate the privacy risks posed by Microsoft’s controversial Recall feature. The move reflects Signal’s commitment to privacy, but it also underscores the problems that arise when powerful tech companies prioritize their innovations at the expense of user consent and security.

Countering Corporate Intrusion

The decision to introduce Screen Security follows Microsoft’s rollout of its AI-driven Recall feature, which captures on-screen activity. Recall, released last month on Copilot+ PCs, lets users search their past activity by taking frequent snapshots of what appears on screen. Although it was framed as a productivity booster, the backlash was immediate. Critics argued that Recall’s privacy controls fall short, in particular because app developers have no way to exclude sensitive windows from capture.

Signal’s response exemplifies the frustrations of smaller developers working in a landscape dominated by larger companies that do not always consider the implications of their innovations. By not offering developers the tools they need to restrict access to sensitive user data, Microsoft has forced third-party applications into a defensive position. Signal resorted to setting a Digital Rights Management (DRM) flag on its application window, a mechanism designed to protect copyrighted media, because that flag also blocks screen captures and Recall provides no dedicated way to opt out.

Screen Security and its Implications

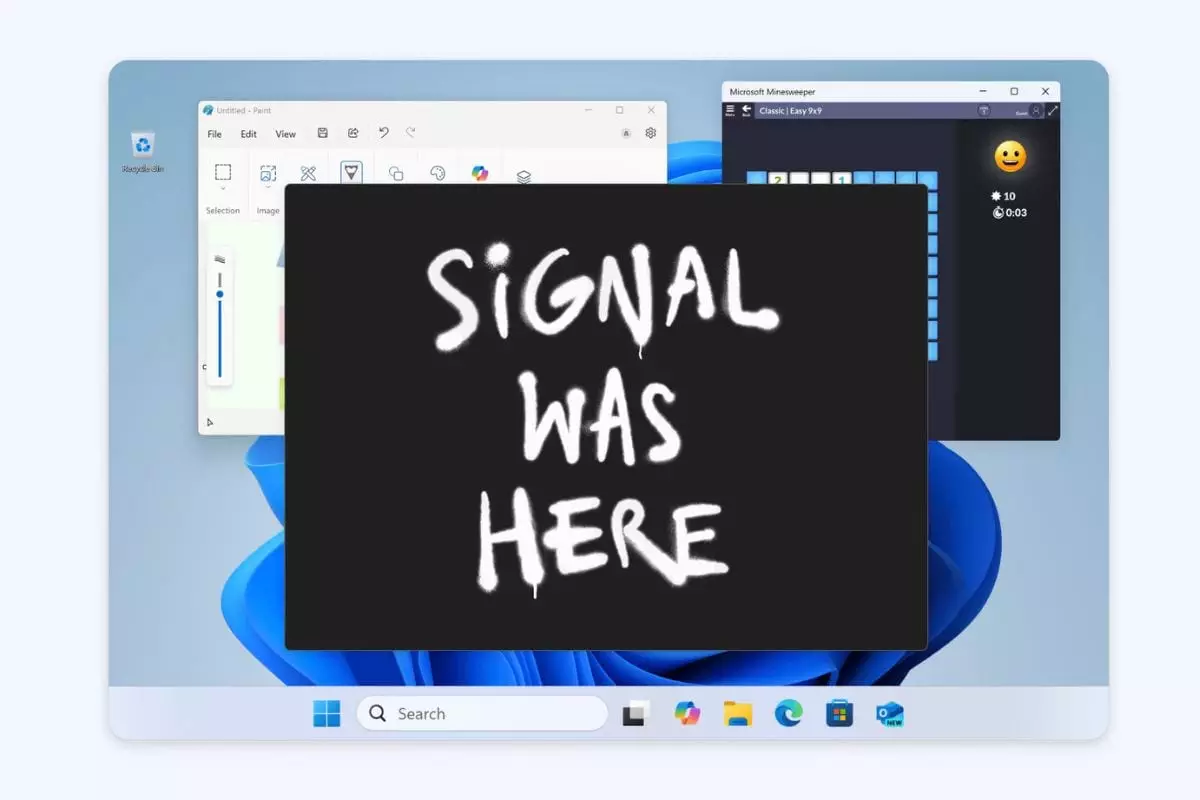

Screen Security, which prevents Windows 11 from capturing screenshots of Signal’s interface, is a significant protective measure. Notably, it is enabled by default, so all users are protected immediately without having to take any action. Users should appreciate the seriousness of such protections; after all, their chats can contain highly sensitive information.

The added security comes with drawbacks that Signal has openly acknowledged. Blocking screenshots might also interfere with accessibility tools such as screen readers and magnifiers. Recognizing this trade-off, Signal lets users turn Screen Security off, but warns them of the consequence: with the setting disabled, Windows can again capture screenshots of Signal and use them for features, such as Recall, that may be less private.
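The default-on, opt-out behavior described above can be sketched in a few lines. This is a minimal illustration, not Signal’s actual code: it assumes an Electron-style window object with a `setContentProtection` method (Electron’s `BrowserWindow` has a method of that name), and the `PrivacySettings` type, its `screenSecurity` field, and both function names are hypothetical.

```typescript
// Minimal shape of a window that can be excluded from screen capture.
// Electron's BrowserWindow has a method with this name; the interface is
// declared locally so the sketch runs without Electron installed.
interface ProtectableWindow {
  setContentProtection(enable: boolean): void;
}

// Hypothetical settings object; the field name is an assumption.
interface PrivacySettings {
  screenSecurity?: boolean; // undefined means the user never changed it
}

// Resolve the effective setting: on unless the user explicitly opted out.
function isScreenSecurityEnabled(settings: PrivacySettings): boolean {
  return settings.screenSecurity ?? true;
}

// Apply the resolved setting to the window and report what was applied.
function applyScreenSecurity(
  win: ProtectableWindow,
  settings: PrivacySettings
): boolean {
  const enabled = isScreenSecurityEnabled(settings);
  win.setContentProtection(enabled);
  return enabled;
}
```

Treating an unset preference as "on" is what makes the protection a default rather than an opt-in: a user who never opens the settings screen is still covered, while a user who relies on a screen reader can switch it off explicitly.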

Signal’s transparency about the consequences of turning this feature off is commendable. Still, it raises a troubling question: why should users have to toggle settings to protect their privacy in the first place? That such a feature is needed at all points to a broader systemic issue in which user rights and accessibility frequently clash with corporate ambitions.

The Bigger Picture: AI and User Rights

Signal’s stance serves as a call to action for the tech industry. The company’s statement urging AI development teams to consider the broader implications of features like Recall resonates with a growing movement toward ethical technology practices. Privacy cannot be an afterthought; it should be foundational. As AI continues to evolve, striking a balance between innovation and user rights becomes more important. Signal is right to demand that future AI systems be developed with these considerations in mind.

Furthermore, the idea that applications like Signal should have to employ “one weird trick” to ensure user privacy raises important ethical questions. Should users be forced to accept a trade-off in accessibility to maintain control over their data? Accessibility cannot be separated from the privacy debate, and the two demand a collaborative approach that values both equally.

With this move, Signal offers a model for how privacy-focused companies can push back against larger industry players without compromising their ethical obligations to users. As users navigate this complex landscape, the actions of organizations like Signal could empower them to insist on more stringent privacy standards from all developers, including those with significant market power like Microsoft.
