In a notable advance for open-source artificial intelligence, Chinese startup DeepSeek has released DeepSeek-V3, a 671-billion-parameter model available on Hugging Face. The release marks an important milestone in the ongoing competition between open-source and proprietary AI. Built on a mixture-of-experts architecture, DeepSeek-V3 activates only a small subset of its parameters for each token it processes, which keeps its compute cost per token far below what its total size would suggest. In DeepSeek’s own benchmarks, the model outperforms prominent competitors, including Meta’s Llama 3.1-405B, underscoring how quickly open-source AI is improving.

DeepSeek-V3 builds directly on its predecessor, DeepSeek-V2, and keeps that model’s two core components: multi-head latent attention (MLA), which compresses the attention key-value cache to cut memory use during inference, and DeepSeekMoE, a mixture-of-experts framework. Rather than running every parameter for every input, DeepSeekMoE routes each token to a small set of specialized expert sub-networks, so only about 37 billion of the model’s 671 billion parameters are active for any given token. This lets the model offer the capacity of a very large network without the full computational cost of running one.
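To make the routing idea concrete, here is a minimal Python sketch of generic top-k mixture-of-experts routing. The toy sizes, the dot-product router, the softmax gating over the selected experts, and the `make_expert` stand-ins are illustrative assumptions, not DeepSeek-V3’s actual design, which uses far more experts and differs in its gating details.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, k):
    """Route one token vector x to its top-k experts and mix their outputs."""
    # One affinity score per expert: dot product of the token with that expert's router row.
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    # Select the k highest-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights are normalized over the selected experts only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # only the selected experts are ever evaluated
        out = [o + g * yv for o, yv in zip(out, y)]
    return out, top


def make_expert(scale):
    """Toy expert that scales its input; a stand-in for a feed-forward network (hypothetical)."""
    return lambda x: [scale * v for v in x]


random.seed(0)
dim, num_experts, k = 4, 8, 2  # toy sizes, not DeepSeek-V3's configuration
experts = [make_expert(s + 1) for s in range(num_experts)]
router_weights = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
token = [random.gauss(0, 1) for _ in range(dim)]

out, chosen = moe_forward(token, router_weights, experts, k)
print("experts used:", chosen)
print(f"active share of DeepSeek-V3 parameters: {37 / 671:.1%}")  # prints 5.5%
```

Only the `k` chosen experts run for each token, which is why a model’s active parameter count can be a small fraction of its total.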

DeepSeek-V3 also introduces two new techniques: an auxiliary-loss-free load-balancing strategy and a multi-token prediction (MTP) training objective. Mixture-of-experts models normally add an auxiliary loss term to stop a few experts from receiving most of the tokens, but that extra term can hurt model quality; DeepSeek’s strategy instead adjusts a per-expert routing bias to keep the load balanced, avoiding that trade-off. MTP trains the model to predict more than one future token at each position rather than only the next one, which provides a denser training signal and, according to DeepSeek, can also be used to speed up decoding. The company reports a generation speed of 60 tokens per second, three times that of DeepSeek-V2.
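The core idea behind bias-based load balancing can be sketched in a few lines of Python. In this illustration, under assumed toy settings, each expert has a bias that is added to its routing score only when choosing experts; after each step, overloaded experts have their bias lowered and underloaded experts have it raised by a fixed step `gamma`. The skewed score distribution, the step size, and the function names are hypothetical, and the sketch omits how the gating weights themselves are computed.

```python
import random


def select_experts(scores, bias, k):
    """Choose top-k experts by score + bias; the bias affects selection only, not gate weights."""
    biased = [s + b for s, b in zip(scores, bias)]
    return sorted(range(len(scores)), key=lambda i: biased[i], reverse=True)[:k]


def update_bias(bias, loads, gamma):
    """Nudge each expert's bias toward balanced load after a step (no auxiliary loss involved)."""
    mean_load = sum(loads) / len(loads)
    new_bias = []
    for b, load in zip(bias, loads):
        if load > mean_load:
            new_bias.append(b - gamma)  # overloaded: less likely to be picked next step
        elif load < mean_load:
            new_bias.append(b + gamma)  # underloaded: more likely to be picked next step
        else:
            new_bias.append(b)
    return new_bias


def run(num_experts=4, k=1, steps=50, tokens_per_step=64, gamma=0.05, seed=0):
    """Simulate routing with a router that systematically favors expert 0 (hypothetical data)."""
    rng = random.Random(seed)
    bias = [0.0] * num_experts
    history = []
    for _ in range(steps):
        loads = [0] * num_experts
        for _ in range(tokens_per_step):
            scores = [rng.gauss(0.5 if i == 0 else 0.0, 0.3) for i in range(num_experts)]
            for i in select_experts(scores, bias, k):
                loads[i] += 1
        history.append(loads)
        bias = update_bias(bias, loads, gamma)
    return history, bias


history, bias = run()
print("first step loads:", history[0])
print("last step loads: ", history[-1])
print("final biases:    ", [round(b, 2) for b in bias])
```

Running it should show expert 0 dominating the first step and the loads evening out as its bias falls, with no extra loss term added to the training objective.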

DeepSeek says it pre-trained DeepSeek-V3 on 14.8 trillion tokens, then extended the model’s context length in two stages: first to 32K tokens and then to 128K tokens. The longer context window lets the model work with much larger inputs in a single prompt, such as long documents or sizable codebases.

Training relied on several efficiency optimizations, including an FP8 mixed-precision training framework, which performs much of the computation in 8-bit floating point, and the DualPipe algorithm, which overlaps computation with communication for more efficient pipeline parallelism. According to DeepSeek, the full training run took about 2,788,000 H800 GPU hours, or roughly $5.576 million at an assumed rental price of $2 per GPU hour. That figure covers the final training run only, not earlier research and ablation experiments, but it is still far below the sums often associated with comparable models: Llama 3.1, for example, reportedly cost more than $500 million to develop.
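The cost figure is simple arithmetic on the reported GPU hours. The short Python check below reproduces it, assuming the $2 per H800 GPU-hour rental price and the per-stage breakdown given in DeepSeek’s technical report; it does not model hardware purchases, energy, or research costs.

```python
# Per-stage H800 GPU hours as reported in DeepSeek's technical report.
STAGE_GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR_USD = 2  # assumed rental price used in the report


def training_cost(stage_hours, price):
    """Return (total GPU hours, total cost in USD) for a dict of stage -> GPU hours."""
    total_hours = sum(stage_hours.values())
    return total_hours, total_hours * price


for stage, hours in STAGE_GPU_HOURS.items():
    print(f"{stage:<18} {hours:>10,} GPU h  ${hours * PRICE_PER_GPU_HOUR_USD:>10,}")

total_hours, total_cost = training_cost(STAGE_GPU_HOURS, PRICE_PER_GPU_HOUR_USD)
print(f"{'total':<18} {total_hours:>10,} GPU h  ${total_cost:>10,}")
# total row: 2,788,000 GPU h and $5,576,000
```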

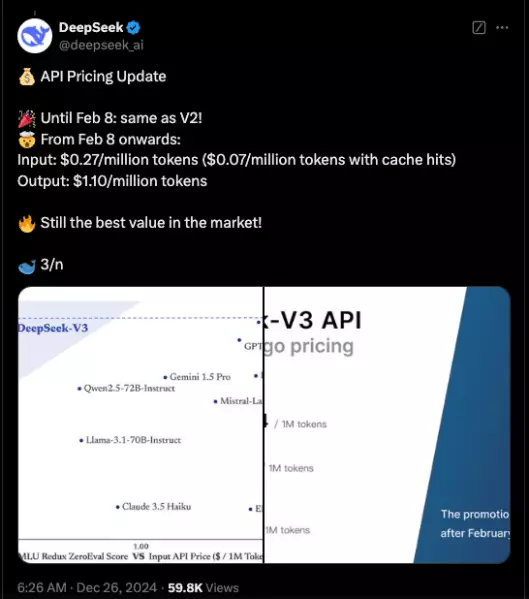

In DeepSeek’s own evaluations, DeepSeek-V3 rivals and in many cases surpasses other prominent models. According to the company’s benchmarks, it outperforms leading open models such as Llama 3.1-405B and Qwen 2.5-72B, which DeepSeek says makes it the strongest open-source model currently available. It performed especially well on Chinese-language and math benchmarks, scoring 90.2 on MATH-500.

DeepSeek-V3 did not lead everywhere, however. Although it outperformed many open-source and some proprietary models, Anthropic’s Claude 3.5 Sonnet outscored it on several benchmarks. The result suggests that while open-source AI is advancing quickly, the field remains competitive, which gives users and businesses a broader range of options.

DeepSeek’s advances point to a pivotal moment for the AI industry, as open-source models close the performance gap with proprietary alternatives. The implications are significant: because high-performing models like DeepSeek-V3 are openly available, smaller enterprises and startups can use them without the prohibitive costs typically associated with proprietary systems.

Furthermore, the wide availability of robust open-source AI models contributes to a more diverse and competitive marketplace. This encourages innovation and reduces the likelihood that a single player will dominate the industry, empowering organizations to develop customized solutions tailored to their specific needs.

Developers and organizations that want to integrate DeepSeek-V3 into their systems can find the code on GitHub under an MIT license; the model weights are released under DeepSeek’s own model license, which permits commercial use. Users can also try the model through DeepSeek Chat, a ChatGPT-style chat interface, or access it programmatically through DeepSeek’s commercial API.
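For programmatic access, DeepSeek’s API is documented as compatible with the OpenAI chat-completions format. The Python sketch below builds such a request using only the standard library. The endpoint URL, the `deepseek-chat` model name, and the `DEEPSEEK_API_KEY` environment variable are assumptions based on DeepSeek’s public documentation at the time of writing and should be checked against the current docs.

```python
import json
import os
import urllib.request

# Assumed values: verify against DeepSeek's current API documentation before use.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-chat"


def build_chat_request(prompt, api_key):
    """Build (but do not send) an OpenAI-style chat-completion request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    req = build_chat_request("Summarize mixture-of-experts in one sentence.", key or "")
    if key:
        print(send(req))
    else:
        print("Set DEEPSEEK_API_KEY to send", req.get_method(), "to", req.full_url)
```

Separating request construction from sending keeps the payload easy to inspect and test without network access.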

DeepSeek’s release of DeepSeek-V3 marks a significant progression for open-source AI, highlighting the rapidly evolving capabilities within the field. As we move toward a future defined by artificial general intelligence, advanced models like DeepSeek-V3 serve not only as tools but also as stepping stones toward building an inclusive AI landscape.
