Artificial intelligence has entered a fascinating new era. As organizations continue to develop state-of-the-art technologies, the conversation is shifting from raw size toward efficiency. We have witnessed the power of large language models (LLMs), some with hundreds of billions of parameters, as they secured a prominent place in the tech landscape. However impressive these behemoths are, a new paradigm is emerging in which smaller, more specialized models, known as small language models (SLMs), are claiming their own place in the spotlight. This is not simply a trend; it is a significant shift in how we build and use these tools.

The Burden of Size: Costs and Consequences

One of the most glaring challenges that accompany LLMs is their immense computational demand: training and running them consumes enormous amounts of computing power and energy. Google reportedly spent $191 million to train its Gemini 1.0 Ultra model, a figure that highlights the financial burden and hints at the energy consumption behind it. For perspective, one widely cited estimate holds that a single ChatGPT query uses roughly ten times as much energy as a single Google search. These environmental costs add to the ethical challenges we face as developers and users of technology, and amid rising concerns over climate change, aligning AI development with sustainability has become a priority.

Ironically, while many celebrate LLMs for their generality and adaptability, these same models are held back by their resource requirements. Their reliance on extensive hardware and energy makes them impractical for many applications, especially for users outside the tech giants that operate vast data centers. As the AI field matures, it is essential to reevaluate the pursuit of raw computational power.

The Beauty of Smallness: Efficiency Meets Precision

Enter small language models (SLMs). These nimble alternatives have a fraction of the parameters of their LLM counterparts, typically a few billion rather than hundreds of billions, and they are well suited to specialized tasks, from answering patient questions as a medical chatbot to summarizing conversations. Major players including IBM, Google, Microsoft, and OpenAI have recently released small models of their own. Zico Kolter, a computer scientist at Carnegie Mellon University, notes that for many tasks an 8 billion-parameter model performs quite well while requiring far fewer resources.

SLMs shine in specific scenarios. Their compact design allows them to run efficiently on standard laptops and smartphones rather than requiring sprawling data centers. This portability makes AI more accessible to industry professionals, developers, and researchers, empowering them to harness AI capabilities without incurring exorbitant costs.
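A back-of-the-envelope calculation shows why parameter count largely decides where a model can run: storing the weights alone takes roughly the number of parameters times the bytes used per parameter. The sketch below assumes 2 bytes per parameter at 16-bit precision and 0.5 bytes with 4-bit quantization, compares the 8 billion-parameter scale Kolter mentions with a hypothetical 400 billion-parameter LLM, and ignores activations, caches, and runtime overhead, so real requirements are higher.

```python
# Assumed storage cost of one parameter at common numeric precisions (bytes).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory in decimal gigabytes for the model weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # The 400B entry is a hypothetical LLM size chosen only for comparison.
    for name, params in [("8B SLM", 8e9), ("400B LLM (hypothetical)", 400e9)]:
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(params, precision)
            print(f"{name:>26} @ {precision}: {gb:8.1f} GB")
```

Under these assumptions, an 8B model needs about 16 GB at 16-bit precision and about 4 GB at 4-bit, which is within reach of many laptops, while the hypothetical 400B model needs about 800 GB for its weights alone and would have to be spread across data-center hardware.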

The Secrets Behind Building Better Small Models

Creating an effective small language model requires careful choices about training methodology and data. LLMs are typically trained on raw data gathered from the internet, which is often messy and inconsistent, and that noise makes training less efficient. Researchers have instead turned to knowledge distillation, in which a large model acts as a teacher, for example by generating a polished, high-quality dataset from which a smaller model learns. The arrangement resembles mentorship, with the seasoned practitioner passing its knowledge on to the novice. The result is small models that perform surprisingly well despite their constrained size.
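The paragraph above describes distillation in terms of a large model producing high-quality training data. A closely related and widely used formulation, the soft-target loss of Hinton et al. (2015), instead trains the student to match the teacher's temperature-softened output probabilities. The sketch below implements that loss in plain Python; the function names, the default temperature of 2.0, and the example logits are illustrative assumptions, and this is not necessarily the method used by any lab mentioned in this article.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax: a higher temperature yields a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float],
                      teacher_logits: Sequence[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) between softened distributions, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures,
    following the soft-target formulation of Hinton et al. (2015).
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # hypothetical logits from a large "teacher" model
    close_student = [3.8, 1.1, 0.4]  # a student that mimics the teacher well
    far_student = [0.5, 1.0, 4.0]    # a student that disagrees with the teacher
    print(distillation_loss(close_student, teacher))
    print(distillation_loss(far_student, teacher))
```

The student that mimics the teacher receives a much smaller loss than the one that disagrees. In an actual training loop this loss, often mixed with the ordinary loss on ground-truth labels, would be minimized with respect to the student's parameters; here it only compares fixed logits.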

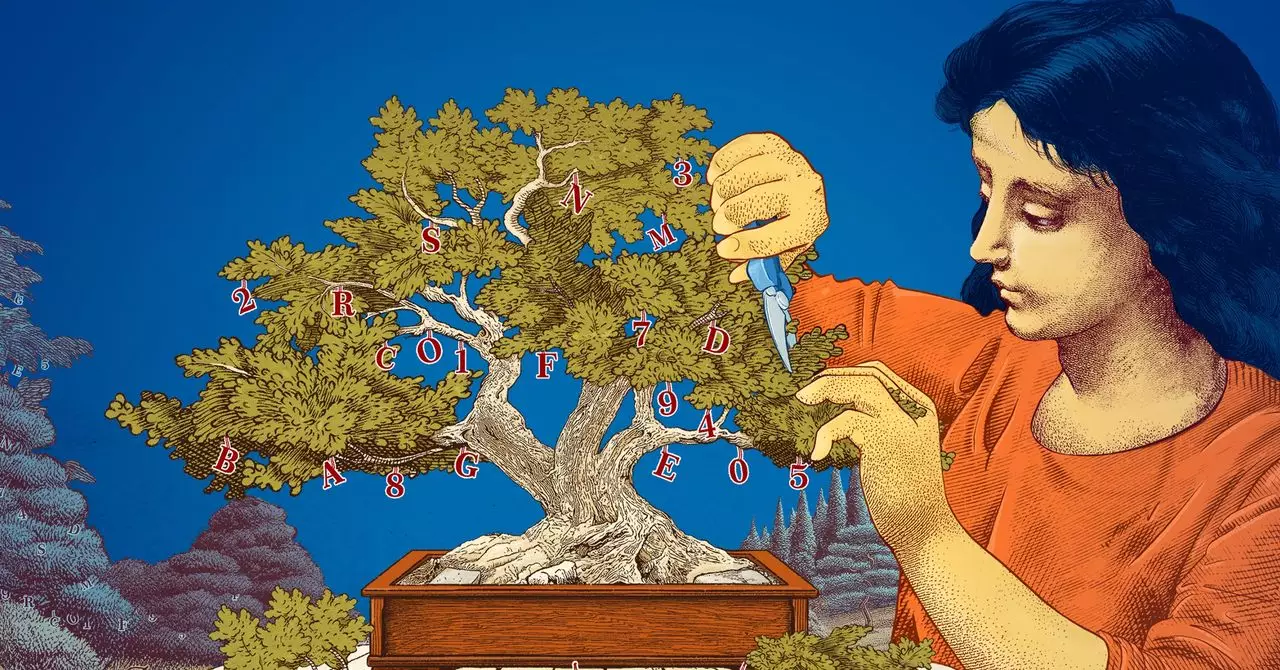

Another approach is pruning, inspired by the way the human brain gains efficiency by snipping connections between synapses as a person ages. Pruning removes superfluous parameters, streamlining a neural network so that it keeps only its essential connections. The idea traces back to a 1989 paper by Yann LeCun, which argued that up to 90% of the parameters in a trained neural network could be removed without sacrificing performance. Because a pruned model can also be tuned for a particular task or environment, researchers can build SLMs that handle specific tasks with remarkable agility.
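LeCun's 1989 method ("Optimal Brain Damage") ranked parameters using second-derivative information. The simpler magnitude-based pruning sketched below is a swapped-in technique, not LeCun's: it just zeroes the weights with the smallest absolute values. The function name, the flat list of weights, and the sparsity levels in the example are illustrative assumptions.

```python
from typing import List, Sequence


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Return a copy of `weights` with the `sparsity` fraction of smallest-magnitude entries set to 0."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = round(len(weights) * sparsity)
    # Rank positions from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    layer = [0.8, -0.05, 0.3, 0.01, -0.9, 0.02, 0.4, -0.03, 0.07, 0.6]  # toy weights
    print(magnitude_prune(layer, sparsity=0.9))  # keeps only -0.9
    print(magnitude_prune(layer, sparsity=0.5))  # keeps the five largest magnitudes
```

In practice, pruning is usually applied layer by layer to a trained network and followed by some fine-tuning so the remaining weights can compensate for those that were removed.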

Empowering Innovation Through Experimentation

Smaller models also give academic and corporate researchers a low-cost environment for experimentation. Leshem Choshen, a research scientist at the MIT-IBM Watson AI Lab, emphasizes that SLMs let researchers explore novel ideas with lower stakes. And because they have fewer parameters, small models may make their reasoning more transparent, helping developers gain insight into their underlying mechanisms.

As artificial intelligence evolves, the role of smaller, targeted models is likely to grow. Their efficiency, adaptability, and lower resource consumption appeal not only to researchers; they also make a compelling case for businesses and organizations seeking to integrate AI responsibly. The future lies not merely in crafting ever-larger AI systems but in making smarter, smaller, and more considered choices that benefit society at large.
