Social media platforms continue to face challenges around content moderation, user engagement, and security threats. Meta, the parent company of Facebook, has sought to provide transparency in this area through its recent report detailing content violations, engagement metrics, and hacking attempts during the fourth quarter of 2024. This article examines the key takeaways from Meta’s report and their implications for users, publishers, and the wider social media ecosystem.

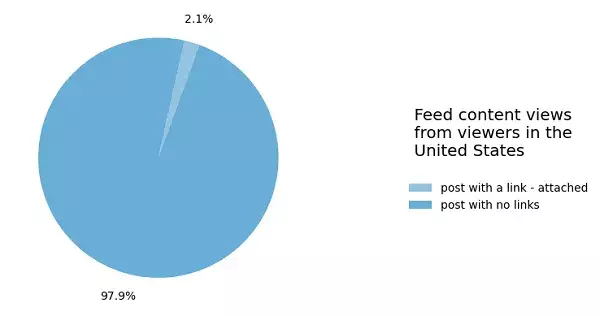

One glaring observation from the latest Widely Viewed Content report is a significant decline in engagement with external content. In Q4 2024, 97.9% of Facebook posts viewed in the U.S. did not include links leading outside the platform, up from 86.5% in Q3 2021. Put another way, the share of viewed posts containing an outbound link fell from 13.5% to 2.1% over that period. Users are now far less likely to be directed to external sources, which makes it harder for publishers to gain organic traffic from Facebook.

This shift appears to reflect a strategic move by Meta towards a more self-contained content ecosystem. The company seems keen to keep engagement within its own applications, sidelining content that originates elsewhere. For publishers reliant on Facebook for traffic, this is a serious concern: a platform that once generated dependable referrals is becoming a much less favorable environment for promoting external content.

The types of posts that dominated Facebook engagement during the quarter mirror a growing trend toward sensationalism and the trivialization of news. Content ranging from celebrity holiday imagery to tear-jerking human-interest stories garnered significant attention. Posts such as Mark Wahlberg’s family Christmas photo, or one about a child asking Santa to help their mother instead of asking for presents, illustrate the kind of content that now resonates most with users.

This pivot toward feel-good, often saccharine content signals a move away from more serious topics such as hard news and political discussion. Its wide appeal points to a tendency toward escapism in digital interactions, and publishers may feel compelled to adopt a similar style if they wish to align with prevailing user preferences on the platform.

On the moderation front, the report presents a mixed picture. It noted an increase in Violent & Graphic Content on Instagram, while Meta also lowered its estimate of fake accounts to around 3% of its global monthly active users. This downward revision is curious, given that Meta has previously put the figure at around 5%. The change could reflect improved detection systems, or it could be an opportunistic adjustment in how the metric is reported.

Moreover, the company has transitioned towards a Community Notes model in place of third-party fact-checking, and has revised its policies concerning hate speech. Meta frames the apparent reduction in erroneous enforcement actions as a positive outcome, but it raises the question of whether genuine enforcement is being compromised in favor of greater leniency.

The effectiveness of Meta’s content moderation remains uncertain. With fewer enforcement actions being taken, it is essential to scrutinize how this shift affects the prevalence of harmful content. Users and critics alike should be wary that a model designed to minimize erroneous penalties may also allow more harmful speech to spread.

The report also outlined several low-level influence operations spreading misinformation, shedding light on potential security threats originating from countries such as Benin, Ghana, and China. Particularly alarming is a Russia-based operation dubbed “Doppelganger,” which has shifted its focus from the U.S. to countries like Germany and France.

This suggests that after major geopolitical events, such as U.S. elections, foreign influence operations may recalibrate their targeting strategies. It also highlights the ongoing battle against misinformation and the need for platforms like Meta to adapt continually to emerging threats. Proactive monitoring and intervention remain essential for safeguarding the user experience.

As Meta navigates content oversight and user engagement, the implications of its Q4 2024 report are multifaceted. Shrinking referral traffic for publishers, changing user engagement patterns, and the delicate balance of moderation all point to a complex ecosystem that demands agile responses.

Meta’s evolving policies might redefine the landscape of social media interaction, but whether these shifts will ultimately benefit or harm users and content creators remains an open question. With transparency efforts in place, stakeholders must stay informed and critically evaluate how such changes affect content engagement and safety across Meta’s platforms.
