Large language models (LLMs) often struggle with complex queries that require specialized knowledge, and they tend to produce inaccurate answers when the information falls outside their training scope. The situation parallels a common human experience: consulting an expert can significantly improve the reliability of an answer. Recognizing this challenge, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have devised an approach named Co-LLM, designed to combine the strengths of general-purpose and specialized models.

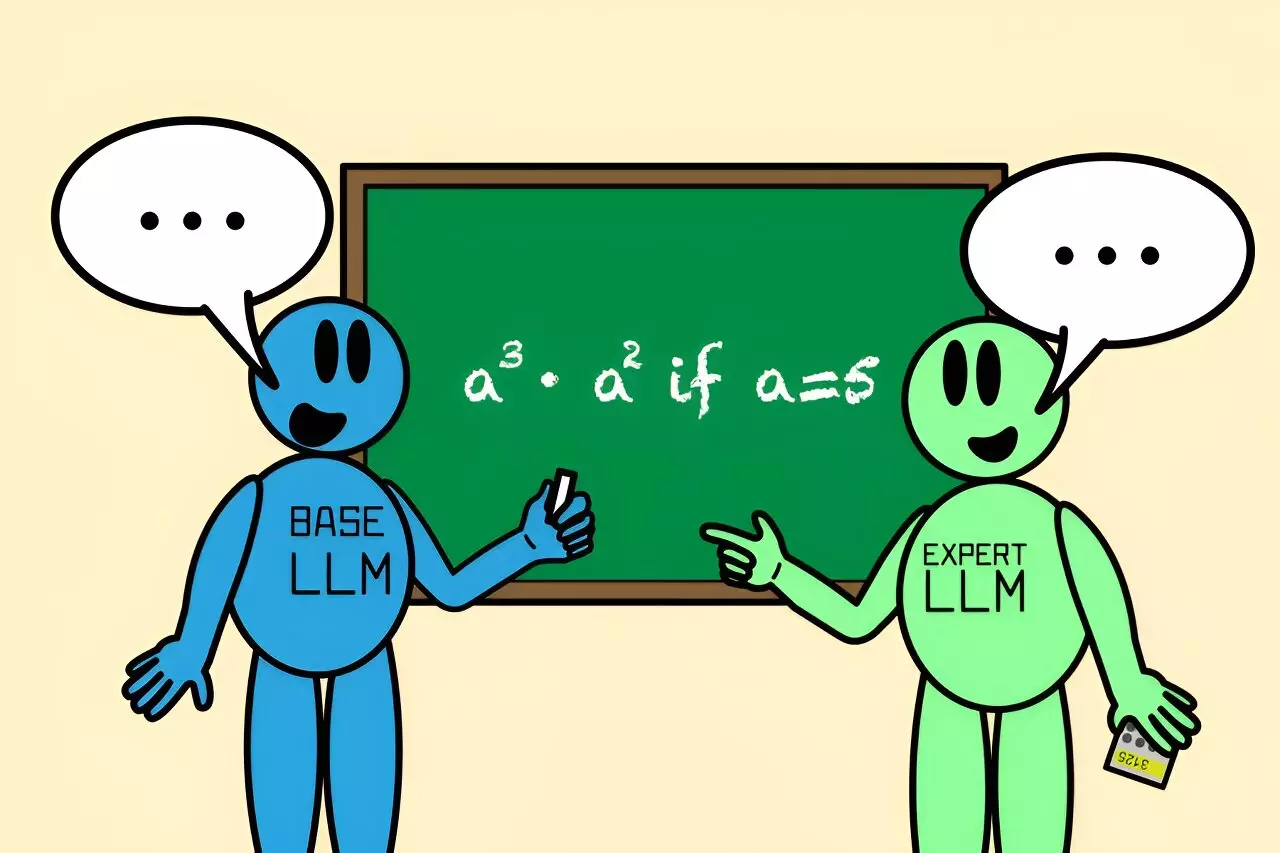

The Co-LLM framework changes how LLMs collaborate. Instead of relying on traditional methods that often involve cumbersome parameter tuning or extensive datasets, Co-LLM uses an algorithm that coordinates a general-purpose base model with a specialized counterpart. As the base LLM generates an answer, Co-LLM examines each token (a word or word fragment) in that response to identify the points where expert input could improve accuracy.

This development matters not only for the quality of responses but also for computational efficiency. The algorithm calls the specialized model only at specific generation steps, which conserves resources and time. A notable feature of the framework is the ‘switch variable’, a component learned through machine learning that estimates, for each token being generated, how competent the base model is to produce it, so the system can recognize when to draw on specialized knowledge.
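The token-level deferral described above can be illustrated with a minimal sketch. This is not the CSAIL implementation: the function names (`collaborative_decode`, `scripted`, `toy_defer_prob`), the fixed `threshold`, and the scripted stand-in "models" are all hypothetical, and the real switch variable is learned from data rather than hand-written. The base model's wrong answer is likewise a made-up value for illustration.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[str]], str]    # hypothetical model interface
DeferProb = Callable[[List[str]], float]  # hypothetical switch interface

def collaborative_decode(
    prompt: List[str],
    base_next: NextToken,
    expert_next: NextToken,
    defer_prob: DeferProb,
    threshold: float = 0.5,   # assumed cutoff, not taken from the article
    max_new_tokens: int = 32,
    eos: str = "<eos>",
) -> Tuple[List[str], List[str]]:
    """Generate token by token, calling the expert only when the switch fires."""
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_new_tokens):
        # The switch decides, per token, which model produces the next token.
        use_expert = defer_prob(tokens) > threshold
        token = expert_next(tokens) if use_expert else base_next(tokens)
        if token == eos:
            break
        tokens.append(token)
        sources.append("expert" if use_expert else "base")
    return tokens[len(prompt):], sources

# Toy stand-ins: each "model" replays a fixed script instead of running an LLM.
PROMPT = ["a^3", "*", "a^2", "if", "a=5", "?"]
BASE_SCRIPT = ["The", "answer", "is", "125", "<eos>"]     # made-up wrong value
EXPERT_SCRIPT = ["The", "answer", "is", "3125", "<eos>"]

def scripted(script: List[str]) -> NextToken:
    def next_token(tokens: List[str]) -> str:
        return script[len(tokens) - len(PROMPT)]
    return next_token

def toy_defer_prob(tokens: List[str]) -> float:
    # Hand-written rule standing in for the learned switch:
    # defer when the next token is likely to be the numeric result.
    return 0.9 if tokens[-1] == "is" else 0.1

generated, sources = collaborative_decode(
    PROMPT, scripted(BASE_SCRIPT), scripted(EXPERT_SCRIPT), toy_defer_prob
)
print(generated)  # ['The', 'answer', 'is', '3125']
print(sources)    # ['base', 'base', 'base', 'expert']
```

In this toy run the expert is consulted for a single token, which is the efficiency argument in miniature: the specialized model runs only where the switch expects the base model to fall short.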

The algorithm is particularly promising in high-stakes domains such as medicine and quantitative reasoning. For instance, when asked to identify the ingredients in a prescription drug, a standalone general-purpose LLM might misreport critical details. With Co-LLM routing the relevant tokens to a biomedical expert model, responses become more precise and reliable. The aim of this collaboration is to ground answers in both foundational knowledge and specific expertise.

Co-LLM also helps in problem-solving scenarios. For example, when asked to evaluate a mathematical expression such as “a^3 · a^2 if a=5”, a typical LLM might give an incorrect result because of a calculation slip. When Co-LLM lets the base model collaborate with a specialized math model such as Llemma, the answer becomes significantly more accurate.
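For reference, the expression in this example can be checked directly. The short snippet below is only an arithmetic check, not part of Co-LLM; the variable names are illustrative. It evaluates the product both term by term and via the exponent rule a^3 · a^2 = a^(3+2).

```python
a = 5

separate = a**3 * a**2   # 125 * 25
combined = a**(3 + 2)    # 5**5, using a^m * a^n = a^(m+n)

print(separate, combined)  # 3125 3125
```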

Co-LLM has performed well in practical tests. It provides answers that surpass those generated by independently tuned models, and it does so by deferring to specialized knowledge only when needed, which yields efficiency gains. This targeted collaboration lets Co-LLM handle complex queries more effectively than traditional approaches that apply a single model uniformly throughout generation.

One of the standout aspects of Co-LLM is its ability to learn from collaboration like a human would. By mimicking human-like decision-making processes—knowing when to “phone a friend”—the model organically improves its ability to discern when it falls short and requires external input. This contextual awareness, built through exposure to domain-specific training data, gives LLMs a new layer of sophistication.

The future of Co-LLM appears promising, with researchers contemplating enhancements that could push the boundaries further. A potential area of development involves integrating mechanisms for self-correction, allowing the model to backtrack when the specialized model fails to provide accurate information. Such a feature would allow Co-LLM to maintain reliability and enhance user trust in its outputs. Additionally, keeping expert models updated through targeted training of the base model promises real-time adaptability to new information, which is essential in rapidly changing fields.

Moreover, as businesses increasingly rely on AI for crucial tasks, Co-LLM has the potential to revolutionize how enterprise documents are created and maintained. By enabling small, tailored models to collaborate with larger LLMs while respecting data privacy concerns, organizations could vastly improve efficiency in managing internal documentation.

Co-LLM represents a significant step forward in collaborative intelligence among LLMs. By enabling effective partnerships between general and specialized models, the algorithm paves the way for more accurate, reliable, and efficient automated responses. As AI continues to advance, the insights and methods born of Co-LLM could well reframe how we understand expertise within artificial systems, ultimately leading to a new era of collaboration in AI that reflects the intricacies of human problem-solving and decision-making. This bodes well not only for the field of artificial intelligence but also for its practical applications across multiple sectors.
