Accurate food quality assessment matters to shoppers and food processors alike, and combining human perception with machine learning is a promising way to improve it. A recent study from the Arkansas Agricultural Experiment Station suggests that computer models can predict food quality, specifically lettuce freshness, more consistently when they are trained with human sensory evaluations. This article covers the study’s findings, their implications for machine learning applications, and the broader impact on food presentation and quality assessment methods.

When selecting produce, people weigh several cues at once, including color, lighting conditions, and other visual signals. This assessment is subjective, so responses can vary widely from person to person. The study, led by Dongyi Wang at the University of Arkansas, highlights this inconsistency by showing that human assessments shift with environmental variables, which can skew results.

The research treats an understanding of human perception as the foundation for better machine learning models. As Wang noted, “When studying the reliability of machine-learning models, the first thing you need to do is evaluate the human’s reliability.” Measuring human bias first is a key step toward refining how machines mimic human evaluations of food quality.
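One simple way to picture a human reliability check is to compare how much ratings spread out under each viewing condition. The sketch below is only an illustration: the article does not describe the study's actual reliability analysis, and the function name, the lighting labels, the 1-5 scale, and the toy scores are all assumptions made for this example.

```python
from statistics import mean, stdev


def rating_spread_by_condition(ratings):
    """Summarize ratings per lighting condition.

    ratings: iterable of (lighting, score) pairs.
    Returns {lighting: (mean score, sample standard deviation)}.
    Each condition needs at least two scores for stdev to be defined.
    """
    scores_by_lighting = {}
    for lighting, score in ratings:
        scores_by_lighting.setdefault(lighting, []).append(score)
    return {
        lighting: (mean(scores), stdev(scores))
        for lighting, scores in scores_by_lighting.items()
    }


# Hypothetical scores for one lettuce image (NOT data from the study).
toy_ratings = [
    ("warm", 4), ("warm", 4), ("warm", 5),
    ("cool", 2), ("cool", 5), ("cool", 3),
]

spread = rating_spread_by_condition(toy_ratings)
for lighting, (avg, sd) in spread.items():
    print(f"{lighting}: mean={avg:.2f}, std dev={sd:.2f}")
```

A larger standard deviation under one condition would indicate that raters agree less with one another there, which is the kind of inconsistency a model trained on those ratings would need to account for.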

Harnessing Data to Train Machine Learning Models

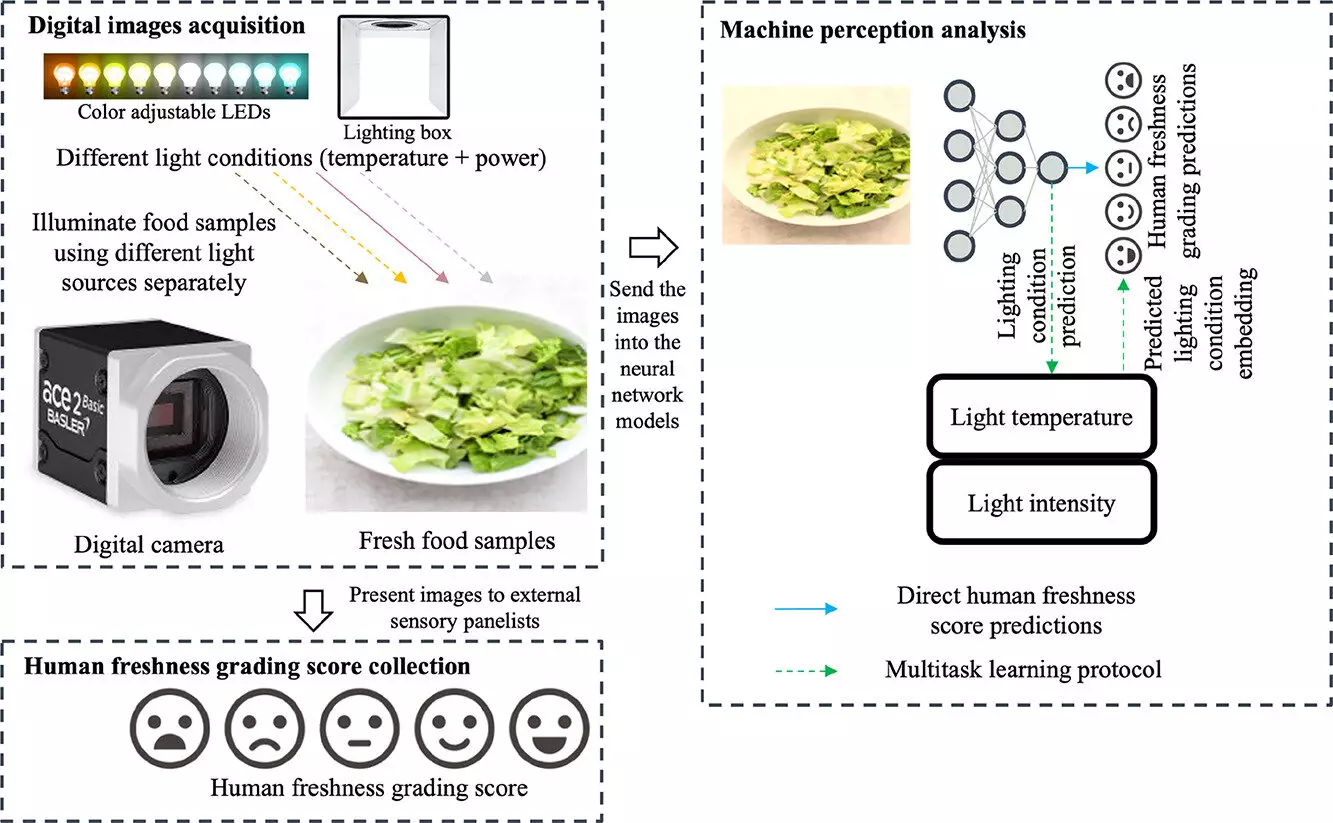

The Arkansas study centers on using data from human assessments to improve computer predictions. Previous algorithms have largely focused on static characteristics of food items, applying simple color parameters without accounting for the variability introduced by lighting. This research fills that gap by examining how different lighting conditions affect human perception of lettuce freshness.

A sensory evaluation with 109 participants formed the backbone of the dataset. Participants graded the freshness of lettuce in images captured under varied lighting conditions over several days. The process produced a dataset of 675 images, which was used to train machine learning models. The results showed that incorporating human perception data could reduce computer prediction errors by approximately 20%, an improvement that underscores the value of integrating human insight into machine learning frameworks.
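To make the arithmetic behind a figure like "approximately 20%" concrete, the sketch below averages several human scores per image into a training label, then compares the error of two sets of predictions against those labels. It is a minimal illustration under stated assumptions, not the study's pipeline: the function names, the 1-5 freshness scale, the image IDs, and every number are hypothetical, and the toy values were chosen so the reduction works out to about 20%.

```python
from statistics import mean


def mean_rating_per_image(ratings):
    """Average all human scores given to each image.

    ratings: iterable of (image_id, score) pairs.
    Returns a dict mapping image_id to its mean score.
    """
    scores_by_image = {}
    for image_id, score in ratings:
        scores_by_image.setdefault(image_id, []).append(score)
    return {image_id: mean(scores) for image_id, scores in scores_by_image.items()}


def mean_absolute_error(predictions, targets):
    """Mean absolute difference between predicted and target scores."""
    return mean(abs(predictions[image_id] - targets[image_id]) for image_id in targets)


def relative_error_reduction(baseline_error, improved_error):
    """Fraction by which the error dropped relative to the baseline."""
    return (baseline_error - improved_error) / baseline_error


# Hypothetical toy ratings on a 1-5 freshness scale (NOT data from the study).
toy_ratings = [("img_a", 4), ("img_a", 5), ("img_b", 2), ("img_b", 3), ("img_b", 1)]
human_labels = mean_rating_per_image(toy_ratings)

# Hypothetical predictions from a baseline model and a human-informed model.
baseline_predictions = {"img_a": 3.5, "img_b": 3.0}
informed_predictions = {"img_a": 3.7, "img_b": 2.8}

baseline_mae = mean_absolute_error(baseline_predictions, human_labels)
informed_mae = mean_absolute_error(informed_predictions, human_labels)
reduction = relative_error_reduction(baseline_mae, informed_mae)
print(f"Relative error reduction: {reduction:.0%}")
```

Mean absolute error is used here only because it is easy to read; the article does not say which error metric the researchers reported.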

This advancement bears significant implications not only for consumer-facing applications but also for food processors. By optimizing machine vision systems with insights derived from human perception, food manufacturers can enhance quality control measures. Such improvements can streamline production processes, mitigate waste, and ultimately lead to higher consumer satisfaction rates.

The study also suggests a change in how grocery stores and food processors might present products. By using insights from sensory evaluations, businesses can display produce in ways that optimize its appeal, potentially increasing sales and consumer trust. For instance, knowing that certain lighting conditions can improve the perceived freshness of merchandise might lead to new merchandising approaches for products like leafy greens.

While the study focuses on lettuce, its methods could apply well beyond food. The training techniques could extend to other products, including jewelry, cosmetics, and textiles. Any industry that relies heavily on visual appeal stands to benefit from combining human sensory insights with machine learning.

As researchers explore these domains, the potential applications can impact marketing strategies and product design, leading to tailored experiences that resonate with consumers’ innate preferences.

The Arkansas Agricultural Experiment Station’s research is an important step toward better machine learning models for food quality assessment. By recognizing and accounting for human biases in perception, the study points toward more accurate and reliable predictions. As technology plays a growing role in how food is evaluated and sold, combining human insight with machine learning could reshape how consumers engage with food products and make informed choices. This integration of disciplines brings challenges as well as opportunities for improving food quality evaluation.
