Deep learning has transformed numerous industries, from healthcare to finance. Because these models are computationally demanding, however, they typically run on powerful cloud infrastructure, which raises significant data-protection concerns, especially when sensitive information such as medical records is involved. MIT researchers have introduced a security protocol that uses quantum mechanics to keep data sent during deep-learning operations confidential.

As businesses adopt more AI-driven solutions, secure data transfer between clients and cloud servers becomes critical. In healthcare, for example, stringent privacy regulations leave hospitals hesitant to use AI tools to analyze sensitive patient data. In conventional digital computation, data sent between systems can be intercepted and copied without leaving a trace, which makes robust security mechanisms all the more necessary. This has driven researchers to look for ways to secure sensitive data without sacrificing the performance of deep-learning models.

MIT’s protocol takes advantage of a fundamental principle of quantum mechanics: the no-cloning principle, which states that it is impossible to create an identical copy of an arbitrary unknown quantum state. By encoding information in the photons (light particles) used in optical communications, the researchers ensure that any attempt to eavesdrop on the data will be detectable. The protocol encodes the weights of a neural network in these optical fields, allowing the network's operations to be carried out accurately while keeping the data secure.
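The no-cloning principle follows from the linearity of quantum mechanics. The standard textbook argument, given here as background rather than as part of the researchers' own analysis, runs as follows. Suppose a single unitary operation $U$ could copy any state onto a blank register $|0\rangle$, so that for two arbitrary states $|\psi\rangle$ and $|\phi\rangle$:

$$
U\bigl(|\psi\rangle \otimes |0\rangle\bigr) = |\psi\rangle \otimes |\psi\rangle,
\qquad
U\bigl(|\phi\rangle \otimes |0\rangle\bigr) = |\phi\rangle \otimes |\phi\rangle .
$$

Taking the inner product of the two equations, and using the fact that a unitary preserves inner products, gives

$$
\langle\psi|\phi\rangle \,\langle 0|0\rangle = \langle\psi|\phi\rangle^{2}
\quad\Longrightarrow\quad
\langle\psi|\phi\rangle \in \{0, 1\}.
$$

The copier can therefore work only for states that are identical or orthogonal, never for an arbitrary unknown state. This is why information carried by quantum light cannot be silently duplicated the way classical bits can.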

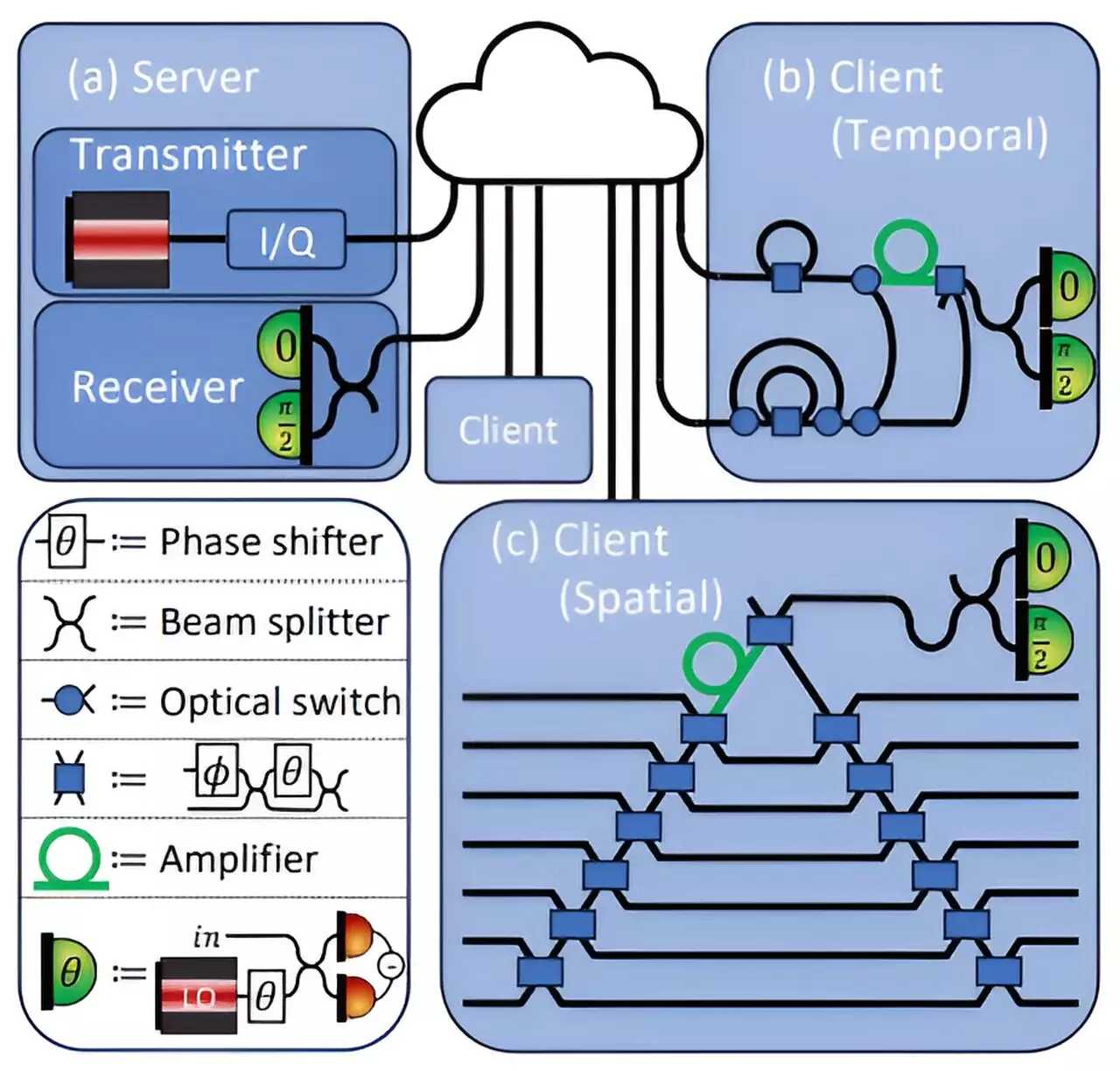

The framework involves two parties: a client with sensitive data, such as medical images, and a central server that runs the deep-learning model. To obtain a prediction, such as whether a patient shows signs of cancer, the client would ordinarily have to transmit potentially revealing data to the server. The protocol is designed so that the client’s data remains protected throughout the interaction.

The client receives the model's weights from the server in encoded form and uses them to run the deep-learning model on its own data, which it keeps private. Because of the nature of quantum light, the client cannot simply copy the weights; it can measure only the results specific to its computation. After making its prediction, the client sends the residual light back to the server for validation, which keeps the exchange secure in both directions.
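The message flow described above can be sketched in a few lines of Python. This is a purely classical toy, not a simulation of the optical protocol: the names `ToyServer`, `ToyClient`, and `run_inference`, the tolerance value, and the "disturbance" numbers are all hypothetical, chosen only to illustrate the order of the steps (server sends a layer, client computes on it, client returns a residual, server validates).

```python
def relu(v):
    return [max(0.0, x) for x in v]

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

class ToyServer:
    """Hypothetical stand-in for the server that owns the model weights."""
    def __init__(self, layers, tolerance=0.05):
        self.layers = layers          # list of weight matrices
        self.tolerance = tolerance    # assumed threshold, not from the source

    def send_layer(self, i):
        # The real protocol encodes weights in light; here we hand over a copy.
        return [row[:] for row in self.layers[i]]

    def check_residual(self, disturbance):
        # Accept only if the returned "residual" was disturbed very little.
        return disturbance <= self.tolerance

class ToyClient:
    """Hypothetical stand-in for the client that owns the private data."""
    def __init__(self, data, greedy=False):
        self.activation = data        # private input; never sent to the server
        self.greedy = greedy          # True = tries to extract extra information

    def process_layer(self, W):
        self.activation = relu(matvec(W, self.activation))
        # Made-up disturbance model: measuring more than needed leaves a mark.
        return 0.5 if self.greedy else 0.01

def run_inference(server, client):
    for i in range(len(server.layers)):
        W = server.send_layer(i)
        disturbance = client.process_layer(W)
        if not server.check_residual(disturbance):
            raise RuntimeError(f"layer {i}: residual check failed")
    return client.activation

server = ToyServer([[[1.0, -1.0], [0.5, 0.5]], [[1.0, 1.0]]])
print(run_inference(server, ToyClient([2.0, 1.0])))
```

In this sketch an honest client finishes the forward pass, while a client constructed with `greedy=True` is rejected at the first layer. In the actual protocol that detection comes from the physics of measurement rather than from a hard-coded number.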

In testing, the protocol preserved deep-learning accuracy at 96%. A small amount of information still leaks, quantified as under 10% for the client and about 1% for the server, but the researchers demonstrated that the protocol maintains confidentiality throughout the data exchange. It balances accessibility and protection, making deep learning usable in privacy-sensitive applications.

The protocol's usefulness extends beyond protecting sensitive medical data. The approach has implications for any sector where data privacy is paramount, including finance and personal communications. By reducing the risks of conventional data interception, it could pave the way for secure distributed machine learning, in which insights are drawn from multiple data sources without jeopardizing privacy.

The versatility of this quantum-based protocol opens up prospects for future work. The researchers intend to explore applications in federated learning, where multiple parties use their own data to collaboratively train a central deep-learning model. Integrating quantum mechanics with AI could yield methods that not only enhance security but also improve accuracy across distributed platforms.

Collaborators on the research have highlighted that it sits at the intersection of deep learning and quantum key distribution, two fields that have traditionally remained separate. Merging insights and methods from both areas creates considerable potential for new solutions that strengthen data privacy, and it challenges existing paradigms for tackling contemporary privacy problems.

As reliance on deep learning continues to expand, so too does the necessity for secure data practices. The development of this protocol exemplifies the potential of quantum technology to redefine data security standards in cloud-based neural networks. Researchers are hopeful that future applications will continue to enhance data protection and privacy in an increasingly interconnected world, ushering in a new era of secure artificial intelligence.
