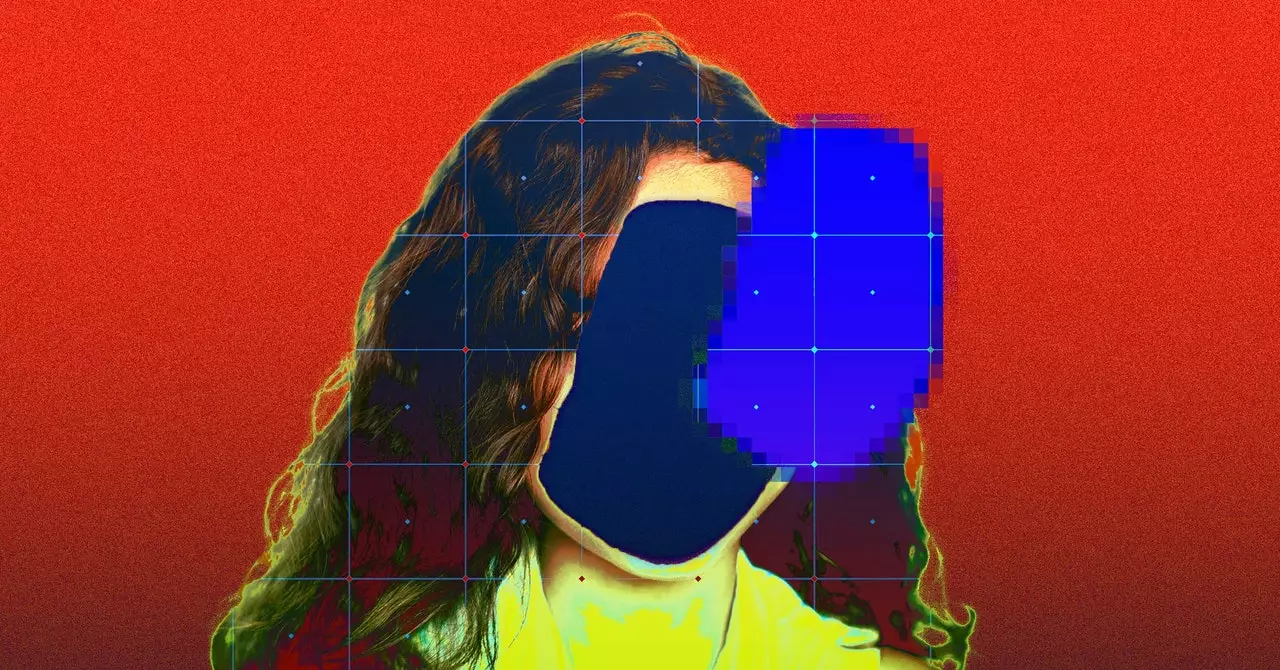

Privacy violations and data scraping are once again in the spotlight following the revelation that over 170 images and personal details of children from Brazil have been used without their knowledge or consent to train AI models. These images, dating from the mid-1990s to as recently as 2023, were included in the LAION-5B dataset, which AI startups use as training data. This egregious violation of privacy raises serious concerns about the ethical use of personal data, particularly when it comes to vulnerable populations such as children.

The use of children’s images in AI training without their consent not only violates their privacy but also exposes them to potential harm. According to Human Rights Watch, the technology developed using these images can create realistic imagery of children, putting them at risk of exploitation by malicious actors. The breach is compounded by the fact that the images were scraped from personal blogs and videos, where the children and their families believed they were sharing content in a private, safe space.

In response to the discovery, LAION, the organization behind the dataset, has taken steps to remove the images in question. However, the fact that these images were used in the first place raises questions about the legality of data scraping and the responsibility of organizations to uphold privacy rights. YouTube, one of the platforms from which the images were sourced, has also reiterated that its terms of service prohibit unauthorized scraping of content.

The ethical implications of using children’s images in AI training go beyond privacy violations and raise concerns about the potential misuse of such technology. The rise of deepfakes, particularly those involving explicit content or harmful behavior, poses a significant threat to children and their safety. Furthermore, the possibility of sensitive information, such as locations or medical data, being exposed through these images underscores the need for stronger regulations and safeguards in the use of AI technology.

The unauthorized use of children’s images in AI training datasets is a serious violation of their privacy rights and has far-reaching implications for their safety and well-being. It is imperative for organizations and researchers to uphold ethical standards and ensure that personal data is collected only with the consent of the individuals concerned and with safeguards in place, especially for vulnerable populations like children. As technology continues to advance, protecting privacy and data security must remain a priority to prevent further harm to those whose images are used without their knowledge or consent.
